In this blog post, I want to share what I learned about treating PMDD by summarizing articles with TextRank. Strictly speaking, TextRank is not a summarization algorithm but a method for extracting the top-ranked sentences of a text; I decided to use it anyway and see the results. I started by using the googlesearch library in Python to search for “PMDD treatments – calcium, hormones, SSRIs, scientific evidence”. The search returned a list of URLs to various articles on PMDD treatments. However, not all of them were useful for my purposes, as some were blocked due to access restrictions. I used BeautifulSoup to extract the text from the remaining articles.

To exclude irrelevant paragraphs, I used the Justext library, which is designed to remove boilerplate content and other non-relevant text from HTML pages. Justext uses heuristics to decide which parts of a page are boilerplate, analyzing the length of the text, the density of links, and the presence of certain HTML tags, and then filters those parts out.

Some examples of the kinds of content that Justext can remove include navigation menus, copyright statements, disclaimers, and other non-content-related text. It does not work perfectly, as I still ended up with sentences such as the following in the resulting articles: “This content is owned by the AAFP. A person viewing it online may make one printout of the material and may use that printout only for his or her personal, non-commercial reference.”
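To make the heuristic idea concrete, here is a toy classifier that looks at just two of the signals mentioned above: paragraph length and link density. It is not Justext's actual algorithm (the real library also considers stopword density and the classification of neighbouring paragraphs); the `classify_paragraph` name and both thresholds are hypothetical values chosen for illustration.

```python
# Toy boilerplate classifier: NOT Justext's real algorithm, only an
# illustration of the length and link-density signals.
MIN_LENGTH = 70          # hypothetical: shorter paragraphs count as boilerplate
MAX_LINK_DENSITY = 0.2   # hypothetical: max share of characters inside links


def classify_paragraph(text: str, link_chars: int) -> str:
    """Return 'boilerplate' or 'content' for one paragraph.

    link_chars is the number of characters of `text` that sit inside links.
    """
    if not text:
        return "boilerplate"
    link_density = link_chars / len(text)
    if link_density > MAX_LINK_DENSITY:
        return "boilerplate"   # mostly links, e.g. a navigation menu
    if len(text) < MIN_LENGTH:
        return "boilerplate"   # too short to be article prose
    return "content"


paragraphs = [
    ("Home | About | Contact", 16),
    ("This paragraph is long enough and contains no links, "
     "so the toy rules keep it as article content.", 0),
    ("Copyright 2023.", 0),
]
for text, links in paragraphs:
    print(classify_paragraph(text, links), "|", text[:40])
```

Real pages would need an HTML parser to measure `link_chars`; the sketch takes it as given to keep the decision logic visible.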

Next, I took an implementation of the TextRank algorithm that I found online and modified it slightly so that it compares sentences using sentence embeddings instead of a bag-of-words representation. Let’s go through the algorithm step by step. I defined a class called TextRank4Sentences. Here is what each line in its __init__ method does:

self.damping = 0.85: This sets the damping coefficient used in the TextRank algorithm to 0.85. It is the probability that, at each step, the random walk moves from the current sentence to a similar sentence; with the remaining probability (1 - 0.85) it jumps to a random sentence instead.

self.min_diff = 1e-5: This sets the convergence threshold. The algorithm will stop iterating when the difference between the PageRank scores of two consecutive iterations is less than this value.

self.steps = 100: This sets the maximum number of iterations; the algorithm stops after this many even if it has not converged.

self.text_str = None: This initializes a variable to store the input text.

self.sentences = None: This initializes a variable to store the individual sentences of the input text.

self.pr_vector = None: This initializes a variable to store the TextRank scores for each sentence in the input text.

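```python
import re

import numpy as np
from nltk import sent_tokenize
from sentence_transformers import SentenceTransformer
from sklearn.metrics.pairwise import cosine_similarity

# Pre-trained sentence-embedding model used to encode every sentence
model = SentenceTransformer('distilbert-base-nli-stsb-mean-tokens')

MULTIPLE_WHITESPACE_PATTERN = re.compile(r"\s+", re.UNICODE)


def normalize_whitespace(text):
    # Called in get_top_sentences() but not shown in the original post;
    # assumed to collapse whitespace runs (including newlines) into one space
    return MULTIPLE_WHITESPACE_PATTERN.sub(" ", text).strip()


class TextRank4Sentences():
    def __init__(self):
        self.damping = 0.85  # damping coefficient, usually 0.85
        self.min_diff = 1e-5  # convergence threshold
        self.steps = 100  # maximum number of iterations
        self.text_str = None
        self.sentences = None
        self.pr_vector = None
```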

The next step is defining a private method _sentence_similarity(), which takes two sentences and returns their cosine similarity. The method encodes each sentence into a vector with the pre-trained SentenceTransformer model and then computes the cosine similarity between the two vectors with a separate function, core_cosine_similarity().

core_cosine_similarity() measures the cosine similarity between two vectors. It uses the cosine_similarity() function from scikit-learn, which takes two 2-D arrays and returns a matrix of scores; for two single embeddings, that is a 1 x 1 matrix. Cosine similarity measures how similar two non-zero vectors of an inner product space are: it is the cosine of the angle between them, so it ranges from -1 (opposite directions) to 1 (same direction).

Mathematically, given two vectors u and v, the cosine similarity is defined as:

cosine_similarity(u, v) = (u . v) / (||u|| ||v||)

where u . v is the dot product of u and v, and ||u|| and ||v|| are the magnitudes of u and v respectively.
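A quick way to check the formula is to compute it by hand for a few small vectors. This standard-library sketch (the `cosine` helper is hypothetical, not part of the post's code) also shows that the score can be negative, so the general range is -1 to 1.

```python
import math


def cosine(u, v):
    """Cosine similarity (u . v) / (||u|| ||v||) for two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


print(cosine([1, 2, 3], [2, 4, 6]))  # same direction: approximately 1.0
print(cosine([1, 0], [0, 1]))        # orthogonal: 0.0
print(cosine([1, 0], [-1, 0]))       # opposite direction: -1.0
```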

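```python
def core_cosine_similarity(vector1, vector2):
    """
    Measure cosine similarity between two vectors (or batches of vectors).

    :param vector1: 2-D array of shape (n, dim)
    :param vector2: 2-D array of shape (m, dim)
    :return: (n, m) array of cosine similarities, each between -1 and 1
    """
    sim_score = cosine_similarity(vector1, vector2)
    return sim_score
```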

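```python
class TextRank4Sentences():
    def __init__(self):
        ...

    def _sentence_similarity(self, sent1, sent2):
        # Encode each sentence as a 1 x dim embedding
        first_sent_embedding = model.encode([sent1])
        second_sent_embedding = model.encode([sent2])
        # cosine_similarity returns a 1 x 1 matrix; return its single value
        return float(core_cosine_similarity(first_sent_embedding, second_sent_embedding)[0][0])
```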

In the next function, the similarity matrix is built for the given sentences. _build_similarity_matrix takes a list of sentences and creates a zero-filled similarity matrix sm of size len(sentences) x len(sentences). Then, for each sentence, it computes the cosine similarity between that sentence's embedding and the embeddings of all sentences in the list, which fills one row of the matrix. After all rows are filled, get_symmetric_matrix is applied, and finally each column is divided by its sum so that every column sums to 1. This column-normalized matrix serves as the transition matrix for PageRank.

The function get_symmetric_matrix adds the transpose of the matrix to itself and then subtracts the diagonal of the original matrix. In other words, each element (i, j) gets the element (j, i) added to it. Because the diagonal elements (i, i) are counted twice in this sum, one copy has to be subtracted again. If only one triangle of the input is filled, the result holds the same values in the upper and lower triangles and is symmetric about its main diagonal, so the similarity between two sentences is the same regardless of their order. Note that cosine similarity is itself symmetric, so a matrix that already holds every pair needs no symmetrization; applying the formula to it doubles the off-diagonal entries relative to the diagonal.

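```python
import numpy as np


def get_symmetric_matrix(matrix):
    """
    Get symmetric matrix

    :param matrix: square numpy array
    :return: matrix plus its transpose, with the diagonal counted only once
    """
    return matrix + matrix.T - np.diag(matrix.diagonal())
```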
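The effect of `matrix + matrix.T - np.diag(matrix.diagonal())` is easiest to see on small inputs. This sketch repeats the post's formula and applies it first to an upper-triangular matrix (the case the trick is designed for) and then to a matrix that is already symmetric, where the off-diagonal values end up doubled. The numbers are made up for illustration.

```python
import numpy as np


def get_symmetric_matrix(matrix):
    # Same formula as in the post: A + A^T counts the diagonal twice,
    # so one copy of the diagonal is subtracted again
    return matrix + matrix.T - np.diag(matrix.diagonal())


upper = np.array([[1.0, 0.4, 0.2],
                  [0.0, 1.0, 0.7],
                  [0.0, 0.0, 1.0]])
sym = get_symmetric_matrix(upper)
print(sym)  # lower triangle now mirrors the upper one, diagonal stays 1

doubled = get_symmetric_matrix(sym)
print(doubled)  # input was already symmetric: off-diagonal values doubled
```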

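```python
class TextRank4Sentences():
    def __init__(self):
        ...

    def _sentence_similarity(self, sent1, sent2):
        ...

    def _build_similarity_matrix(self, sentences, stopwords=None):
        # stopwords is not used with sentence embeddings; kept for compatibility
        # Encode every sentence once instead of re-encoding inside the loop
        embeddings = model.encode(sentences)
        # create an empty similarity matrix
        sm = np.zeros([len(sentences), len(sentences)])
        for idx in range(len(sentences)):
            print("Current location: %d" % idx)
            # Row idx: similarity of sentence idx to every sentence in the list
            sm[idx] = core_cosine_similarity(embeddings[idx:idx + 1], embeddings)[0]
        # Get symmetric matrix (cosine similarity is already symmetric,
        # so this doubles the off-diagonal entries)
        sm = get_symmetric_matrix(sm)
        # Normalize matrix by column; columns that sum to 0 are left as 0
        norm = np.sum(sm, axis=0)
        sm_norm = np.divide(sm, norm, out=np.zeros_like(sm), where=norm != 0)
        return sm_norm
```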
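Because cosine similarity only needs the embeddings, the whole matrix can also be computed in one vectorized step. The sketch below uses made-up 3-dimensional vectors in place of SentenceTransformer embeddings (the `build_similarity_matrix` name and the toy data are hypothetical). Like the post's code, it keeps each sentence's similarity to itself on the diagonal; it skips get_symmetric_matrix because the cosine matrix is already symmetric, and then normalizes the columns so that each sums to 1.

```python
import numpy as np


def build_similarity_matrix(embeddings: np.ndarray) -> np.ndarray:
    """Cosine-similarity matrix, normalized so that each column sums to 1."""
    # Scale every row to unit length; a dot product then equals cosine similarity
    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sm = unit @ unit.T
    # Column normalization; columns that sum to 0 are left as zeros
    col_sums = sm.sum(axis=0)
    return np.divide(sm, col_sums, out=np.zeros_like(sm), where=col_sums != 0)


# Toy "embeddings" for three sentences (stand-ins for model.encode output)
toy = np.array([[1.0, 0.0, 0.0],
                [0.9, 0.1, 0.0],
                [0.0, 0.0, 1.0]])
m = build_similarity_matrix(toy)
print(np.round(m, 3))
print(m.sum(axis=0))  # each column sums to (approximately) 1
```

Encoding all sentences in a single `model.encode` call is also much faster than encoding them one pair at a time.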

In the next function, the ranking algorithm PageRank is implemented to calculate the importance of each sentence in the document. The similarity matrix created in the previous step is used as the basis for the PageRank algorithm. The function takes the similarity matrix as input and initializes the pagerank vector with a value of 1 for each sentence.

In each iteration, the PageRank vector is updated from the similarity matrix and the damping coefficient. The damping coefficient is the probability of following a link from the current sentence to a similar sentence; with the remaining probability (1 - damping), the walk jumps to a random sentence instead. The algorithm iterates until either the maximum number of steps is reached or the difference between the current and previous PageRank vectors falls below the threshold. Finally, the function returns the PageRank vector, which holds the importance score of each sentence.

```python
class TextRank4Sentences():
    def __init__(self):
        ...

    def _sentence_similarity(self, sent1, sent2):
        ...

    def _build_similarity_matrix(self, sentences, stopwords=None):
        ...

    def _run_page_rank(self, similarity_matrix):
        pr_vector = np.ones(len(similarity_matrix))
        # Iteration
        for epoch in range(self.steps):
            previous_pr = pr_vector
            pr_vector = (1 - self.damping) + self.damping * np.matmul(similarity_matrix, pr_vector)
            # Stop once the scores themselves stop changing. Comparing only the
            # sums does not work: a column-normalized matrix keeps the sum constant.
            if np.abs(pr_vector - previous_pr).sum() < self.min_diff:
                break
        return pr_vector
```
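A compact sketch of the same power iteration on a toy 3 x 3 column-stochastic matrix (the matrix values and the `page_rank` name are hypothetical). It stops when the score vector itself stops changing, measured as the L1 distance between successive vectors. It also illustrates why the convergence test must compare vectors: with a column-normalized matrix, the sum of the scores stays equal to the number of sentences on every iteration, so comparing only the sums cannot detect convergence.

```python
import numpy as np


def page_rank(matrix, damping=0.85, min_diff=1e-5, steps=100):
    """Power iteration: pr = (1 - d) + d * M @ pr, until pr stops changing."""
    pr = np.ones(len(matrix))
    for _ in range(steps):
        new_pr = (1 - damping) + damping * (matrix @ pr)
        converged = np.abs(new_pr - pr).sum() < min_diff
        pr = new_pr
        if converged:
            break
    return pr


# Toy column-stochastic matrix: every column sums to 1
m = np.array([[0.0, 0.5, 0.3],
              [0.6, 0.0, 0.7],
              [0.4, 0.5, 0.0]])
scores = page_rank(m)
print(scores, scores.sum())      # the sum stays 3.0 (up to rounding)
print(np.argsort(scores)[::-1])  # sentence indices, best first
```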

The _get_sentence function takes an index and returns the corresponding sentence from the list of sentences, or an empty string if the index is out of range. It is a small helper for looking up ranked sentences safely by index.

```python
class TextRank4Sentences():
    def __init__(self):
        ...

    def _sentence_similarity(self, sent1, sent2):
        ...

    def _build_similarity_matrix(self, sentences, stopwords=None):
        ...

    def _run_page_rank(self, similarity_matrix):
        ...

    def _get_sentence(self, index):
        try:
            return self.sentences[index]
        except IndexError:
            return ""
```

The code then defines a method called get_top_sentences, which returns a summary made of the most important sentences in a document. It takes two optional arguments: number (default 5) is the maximum number of sentences in the summary, and similarity_threshold (default 0.5) is the similarity above which a candidate sentence counts as “too similar” to an already selected sentence and is skipped.

The method first initializes an empty list called top_sentences to hold the selected sentences. It then checks if a pr_vector attribute has been computed for the document. If the pr_vector exists, it sorts the indices of the sentences in descending order based on their PageRank scores and saves them in the sorted_pr variable.

It then iterates through the sentences in sorted_pr, starting from the one with the highest PageRank score. For each sentence, it removes any extra whitespace, replaces newlines with spaces, and checks if it is too similar to any of the sentences already selected for the summary. If it is not too similar, it adds the sentence to top_sentences. Once the selected sentences are finalized, the method concatenates them into a single string separated by spaces, and returns the summary.

```python
class TextRank4Sentences():
    def __init__(self):
        ...

    def _sentence_similarity(self, sent1, sent2):
        ...

    def _build_similarity_matrix(self, sentences, stopwords=None):
        ...

    def _run_page_rank(self, similarity_matrix):
        ...

    def _get_sentence(self, index):
        ...

    def get_top_sentences(self, number=5, similarity_threshold=0.5):
        top_sentences = []
        if self.pr_vector is not None:
            # Sentence indices ordered from highest to lowest PageRank score
            sorted_pr = list(np.argsort(self.pr_vector)[::-1])
            index = 0
            while len(top_sentences) < number and index < len(sorted_pr):
                sent = self.sentences[sorted_pr[index]]
                sent = normalize_whitespace(sent)
                sent = sent.replace('\n', ' ')
                # Check if the sentence is too similar to any of the sentences already in top_sentences
                is_similar = False
                for s in top_sentences:
                    sim = self._sentence_similarity(sent, s)
                    if sim > similarity_threshold:
                        is_similar = True
                        break
                if not is_similar:
                    top_sentences.append(sent)
                index += 1
        summary = ' '.join(top_sentences)
        return summary
```
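The selection loop is essentially a greedy redundancy filter. To try it without loading a sentence-embedding model, this sketch swaps in word-overlap (Jaccard) similarity; `select_top`, `jaccard`, the toy sentences, and the toy scores are hypothetical stand-ins for get_top_sentences, _sentence_similarity, the real sentences, and the PageRank vector.

```python
def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity, used here instead of embedding similarity."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0


def select_top(sentences, scores, number=2, similarity_threshold=0.5):
    # Visit sentences from highest to lowest score
    order = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    chosen = []
    for i in order:
        if len(chosen) >= number:
            break
        sent = " ".join(sentences[i].split())  # collapse whitespace and newlines
        # Keep the sentence only if it is not too similar to any chosen one
        if all(jaccard(sent, c) <= similarity_threshold for c in chosen):
            chosen.append(sent)
    return " ".join(chosen)


sentences = [
    "SSRIs reduce PMDD symptoms",
    "SSRIs reduce PMDD symptoms\nin trials",
    "Calcium supplements were also studied",
]
scores = [0.9, 0.8, 0.5]
print(select_top(sentences, scores))
# → SSRIs reduce PMDD symptoms Calcium supplements were also studied
```

The second sentence scores higher than the third but is skipped because its word overlap with the first (4 of 6 words, about 0.67) exceeds the 0.5 threshold.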

The _remove_duplicates method takes a list of sentences as input and returns a list of unique sentences, by removing any duplicates in the input list.

```python
class TextRank4Sentences():
    def __init__(self):
        ...

    def _sentence_similarity(self, sent1, sent2):
        ...

    def _build_similarity_matrix(self, sentences, stopwords=None):
        ...

    def _run_page_rank(self, similarity_matrix):
        ...

    def _get_sentence(self, index):
        ...

    def get_top_sentences(self, number=5, similarity_threshold=0.5):
        ...

    def _remove_duplicates(self, sentences):
        seen = set()
        unique_sentences = []
        for sentence in sentences:
            if sentence not in seen:
                seen.add(sentence)
                unique_sentences.append(sentence)
        return unique_sentences
```
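The point of tracking `seen` alongside a list, rather than just calling set(), is that the original sentence order is preserved. Since Python 3.7, dictionaries keep insertion order, so the same order-preserving de-duplication can also be written as a one-liner. This is an equivalent alternative (the `remove_duplicates` name is hypothetical), not the code the post used.

```python
def remove_duplicates(sentences):
    # dict keys are unique and keep first-seen order (Python 3.7+)
    return list(dict.fromkeys(sentences))


print(remove_duplicates(["b", "a", "b", "c", "a"]))  # → ['b', 'a', 'c']
```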

The analyze method takes text, a list of article texts, and an optional list of stop words, stop_words. It first removes duplicate articles by converting the list to a set and then joins the remaining articles into a single string, self.full_text.

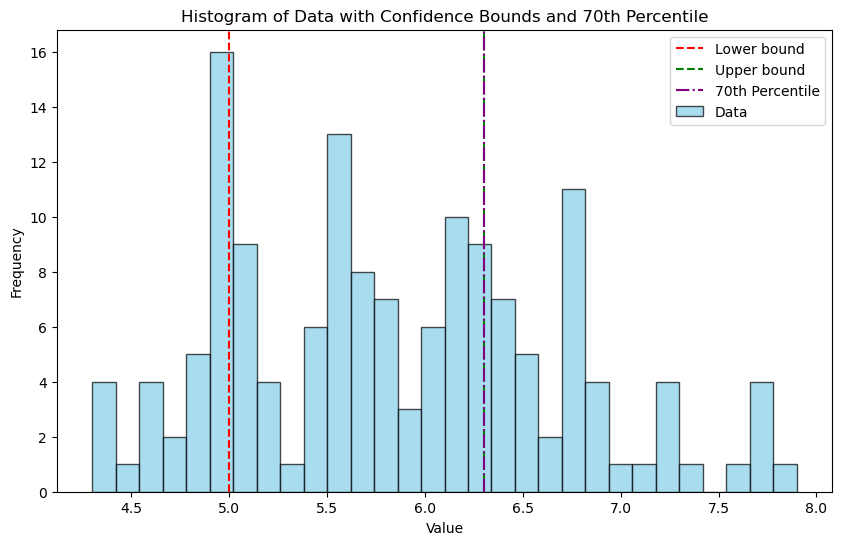

It then uses the sent_tokenize() function from the nltk library to split the text into sentences and removes duplicate sentences with the _remove_duplicates() method. It also drops short sentences: any sentence whose word count is less than or equal to the 10th percentile of all sentence lengths is removed.

After that, the method calculates a similarity matrix with the _build_similarity_matrix() method, passing in the preprocessed list of sentences and the stop_words list (which the embedding-based similarity does not use).

Finally, it runs the PageRank algorithm on the similarity matrix using the _run_page_rank() method to obtain a ranking of the sentences based on their importance in the text. This ranking is stored in self.pr_vector.

```python
class TextRank4Sentences():
    ...

    def analyze(self, text, stop_words=None):
        # text is a list of article strings; set() drops duplicate articles
        self.text_unique = list(set(text))
        self.full_text = ' '.join(self.text_unique)
        self.sentences = sent_tokenize(self.full_text)
        self.sentences = self._remove_duplicates(self.sentences)
        # Drop the shortest sentences: keep only those longer than the 10th percentile
        sent_lengths = [len(sent.split()) for sent in self.sentences]
        min_length = np.percentile(sent_lengths, 10)
        self.sentences = [sentence for sentence in self.sentences if len(sentence.split()) > min_length]
        print("Min length: %d, Total number of sentences: %d" % (min_length, len(self.sentences)))
        similarity_matrix = self._build_similarity_matrix(self.sentences, stop_words)
        self.pr_vector = self._run_page_rank(similarity_matrix)
```
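To see what the length filter in analyze() does, here is the same 10th-percentile cutoff applied to a handful of made-up sentences (hypothetical data). `np.percentile` interpolates linearly between data points by default, so the cutoff does not have to be a whole number.

```python
import numpy as np

sentences = [
    "Yes.",
    "See below.",
    "SSRIs were studied in several randomized trials.",
    "Symptoms usually begin in the luteal phase of the cycle.",
    "Cognitive-behavioral therapy may also help some patients cope.",
]
lengths = [len(s.split()) for s in sentences]  # word counts: 1, 2, 7, 10, 8
cutoff = np.percentile(lengths, 10)            # 10th percentile of the lengths
kept = [s for s, n in zip(sentences, lengths) if n > cutoff]
print(lengths, cutoff)  # cutoff is about 1.4
print(len(kept), "of", len(sentences), "sentences kept")  # only "Yes." is dropped
```

With real articles, the cutoff adapts to the overall distribution of sentence lengths, so only the shortest tenth or so (fragments, captions, and the like) is discarded.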

To find articles, I used the googlesearch library. The code below runs a Google search with the library's search() function for the query “PMDD treatments – calcium, hormones, SSRIs, scientific evidence” and collects the URLs of the top 7 results.

```python
# summarize articles
import requests
from bs4 import BeautifulSoup
from googlesearch import search
import justext

query = "PMDD treatments - calcium, hormones, SSRIs, scientific evidence"

# perform the google search and retrieve the top 7 search results
top_results = []
for url in search(query, num_results=7):
    top_results.append(url)
```

Next, the code extracts the article text from each of the top search results. For each URL in top_results, it sends an HTTP GET request with the requests library and then uses the justext library to keep only the main content of the page, discarding boilerplate (non-content) paragraphs. Pages that contain PubMed Central's “access blocked” message are skipped.

```python
article_texts = []

# extract the article text for each of the top search results
for url in top_results:
    try:
        response = requests.get(url, timeout=10)
    except requests.RequestException:
        continue  # skip pages that cannot be fetched
    paragraphs = justext.justext(response.content, justext.get_stoplist("English"))
    text = ''
    for paragraph in paragraphs:
        if not paragraph.is_boilerplate:
            text += paragraph.text + '\n'
    # skip pages where PubMed Central blocked access
    if "Your access to PubMed Central has been blocked" not in text:
        article_texts.append(text.strip())
        print(text)
        print('-' * 50)

print("Total articles collected: %d" % len(article_texts))
```

In the final step, the extracted article texts are passed to an instance of the TextRank4Sentences class. get_top_sentences() returns a single string that joins the top-ranked sentences, which are considered the most important and representative sentences of the input text. This string is stored in the variable summary_text.

```python
# summarize
tr4sh = TextRank4Sentences()
tr4sh.analyze(article_texts)
summary_text = tr4sh.get_top_sentences(15)
```

Results:

(I did not list irrelevant sentences that appeared in the final results, such as “You will then receive an email that contains a secure link for resetting your password…”)

Total articles collected: 6

There have been at least 15 randomized controlled trials of the use of selective serotonin-reuptake inhibitors (SSRIs) for the treatment of severe premenstrual syndrome (PMS), also called premenstrual dysphoric disorder (PMDD).

It is possible that the irritability/anger/mood swings subtype of PMDD is differentially responsive to treatments that lead to a quick change in ALLO availability or function, for example, symptom-onset SSRI or dutasteride.

* My note: ALLO is allopregnanolone

* My note: Dutasteride is a synthetic 4-azasteroid compound that is a selective inhibitor of both the type 1 and type 2 isoforms of steroid 5 alpha-reductase

From 2 to 10 percent of women of reproductive age have severe distress and dysfunction caused by premenstrual dysphoric disorder, a severe form of premenstrual syndrome.

The rapid efficacy of selective serotonin reuptake inhibitors (SSRIs) in PMDD may be due in part to their ability to increase ALLO levels in the brain and enhance GABAA receptor function with a resulting decrease in anxiety.

Clomipramine, a serotoninergic tricyclic antidepressant that affects the noradrenergic system, in a dosage of 25 to 75 mg per day used during the full cycle or intermittently during the luteal phase, significantly reduced the total symptom complex of PMDD.

Relapse was more likely if a woman stopped sertraline after only 4 months versus 1 year, if she had more severe symptoms prior to treatment and if she had not achieved full symptom remission with sertraline prior to discontinuation.

Women with negative views of themselves and the future caused or exacerbated by PMDD may benefit from cognitive-behavioral therapy. This kind of therapy can enhance self-esteem and interpersonal effectiveness, as well as reduce other symptoms.

Educating patients and their families about the disorder can promote understanding of it and reduce conflict, stress, and symptoms.

Anovulation can also be achieved with the administration of estrogen (transdermal patch, gel, or implant).

In a recent meta-analysis of 15 randomized, placebo-controlled studies of the efficacy of SSRIs in PMDD, it was concluded that SSRIs are an effective and safe first-line therapy and that there is no significant difference in symptom reduction between continuous and intermittent dosing.

Preliminary confirmation of alleviation of PMDD with suppression of ovulation with a GnRH agonist should be obtained prior to hysterectomy.

Sexual side effects, such as reduced libido and inability to reach orgasm, can be troubling and persistent, however, even when dosing is intermittent.

* My note: I think this sentence refers to the side effects of SSRIs